IJCAI 2021

Instance-Aware Coherent Video Style Transfer for Chinese Ink Wash Painting

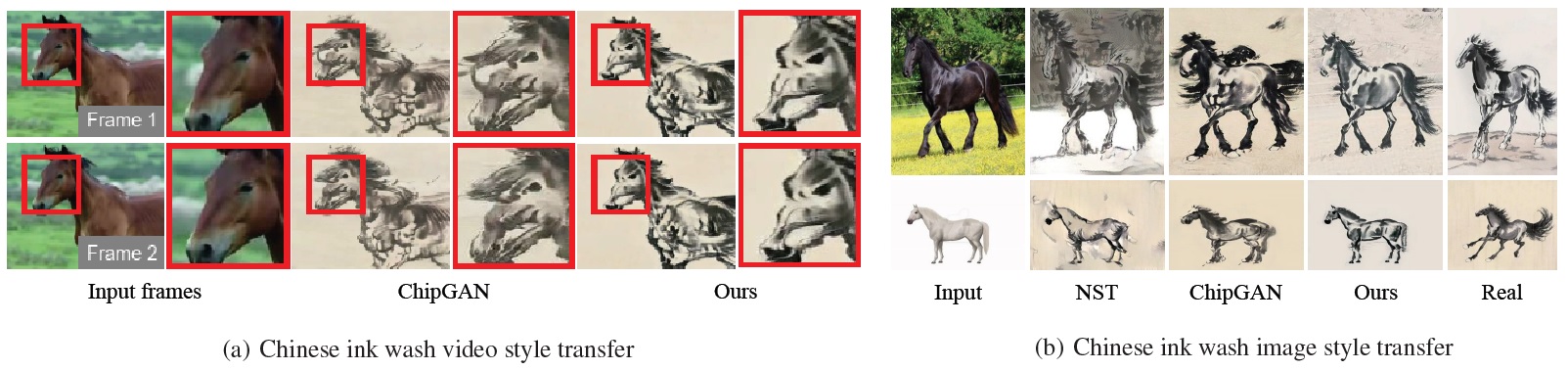

Our model produces temporally stable Chinese ink wash videos. It also renders brush strokes whose tonality, shading, and size adapt to the image content, and it leaves appropriate white space, capturing the characteristics of freehand brushwork better than existing methods.

Abstract

Recent research has made remarkable progress in fast video style transfer based on Western paintings. However, because the drawing techniques and aesthetic expression of Chinese ink wash painting are inherently different, existing methods either achieve poor temporal consistency or fail to transfer the key freehand brushstroke characteristics of Chinese ink wash painting. In this paper, we present a novel video style transfer framework for Chinese ink wash paintings. The two key ideas are multi-frame fusion for temporal coherence and instance-aware style transfer. Frame reordering and stylization based on reference frame fusion are proposed to improve temporal consistency. Meanwhile, by leveraging instance segmentation, the proposed method adaptively leaves white space in the background and selects a proper scale to extract features and depict the foreground subject. Experimental results demonstrate the superiority of the proposed method over state-of-the-art methods in terms of both temporal coherence and visual quality.

Method

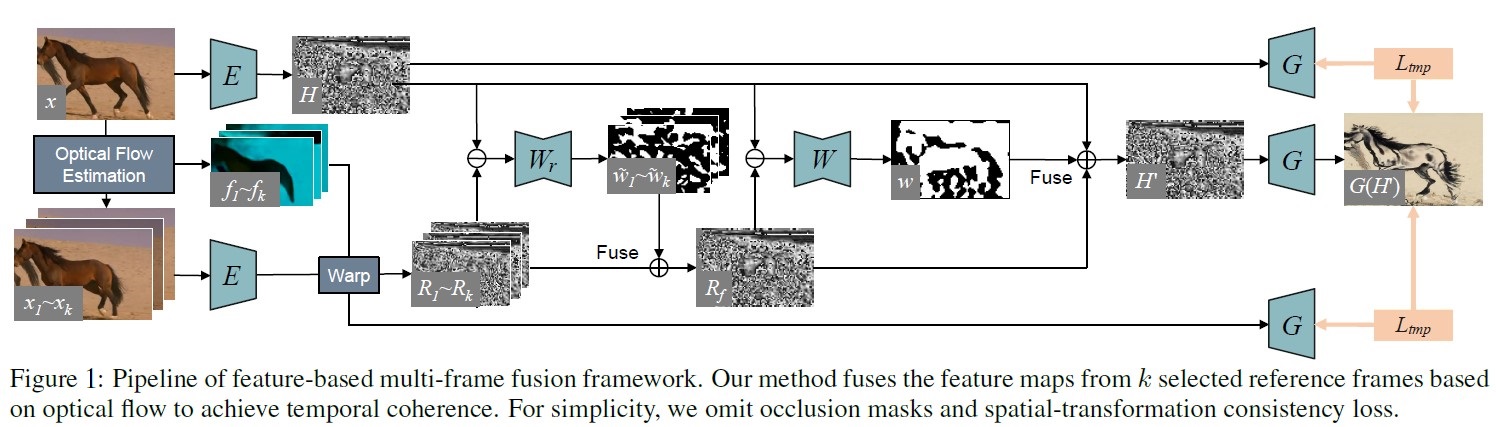

Video Style Transfer: We propose a multi-frame fusion method to guide the generation of ink frames. The feature maps of the reference frames are aligned to the current frame using optical flow. They are then fused into a single reference map, which is used to correct the current frame's feature map according to a mask.
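The align, fuse, and correct steps described above can be sketched as follows. This is only an illustrative NumPy sketch, not the released implementation: the function names, the nearest-neighbor warping, the plain averaging used for fusion, and the convention that the mask is 1 where the reference map should be trusted are all assumptions made for the example.

```python
import numpy as np


def warp_nearest(feat, flow):
    """Backward-warp a feature map (C, H, W) onto the current frame.

    Assumption: flow has shape (H, W, 2) and stores, for each location in the
    current frame, the (x, y) displacement in pixels to the matching location
    in the reference frame. Nearest-neighbor sampling keeps the sketch short.
    """
    _, h, w = feat.shape
    ys, xs = np.mgrid[0:h, 0:w]
    src_x = np.clip(np.rint(xs + flow[..., 0]).astype(int), 0, w - 1)
    src_y = np.clip(np.rint(ys + flow[..., 1]).astype(int), 0, h - 1)
    return feat[:, src_y, src_x]


def fuse_references(cur_feat, ref_feats, flows, mask):
    """Align reference features, fuse them, and correct the current features.

    Assumptions: fusion is a plain average of the warped reference maps, and
    mask (H, W) is 1 where the fused reference map replaces the current
    feature map and 0 where the current feature map is kept.
    """
    warped = [warp_nearest(f, fl) for f, fl in zip(ref_feats, flows)]
    ref_map = np.mean(warped, axis=0)
    return mask * ref_map + (1.0 - mask) * cur_feat
```

With a zero flow field and an all-ones mask, `fuse_references` returns the averaged reference features unchanged; with an all-zeros mask it returns the current frame's features.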

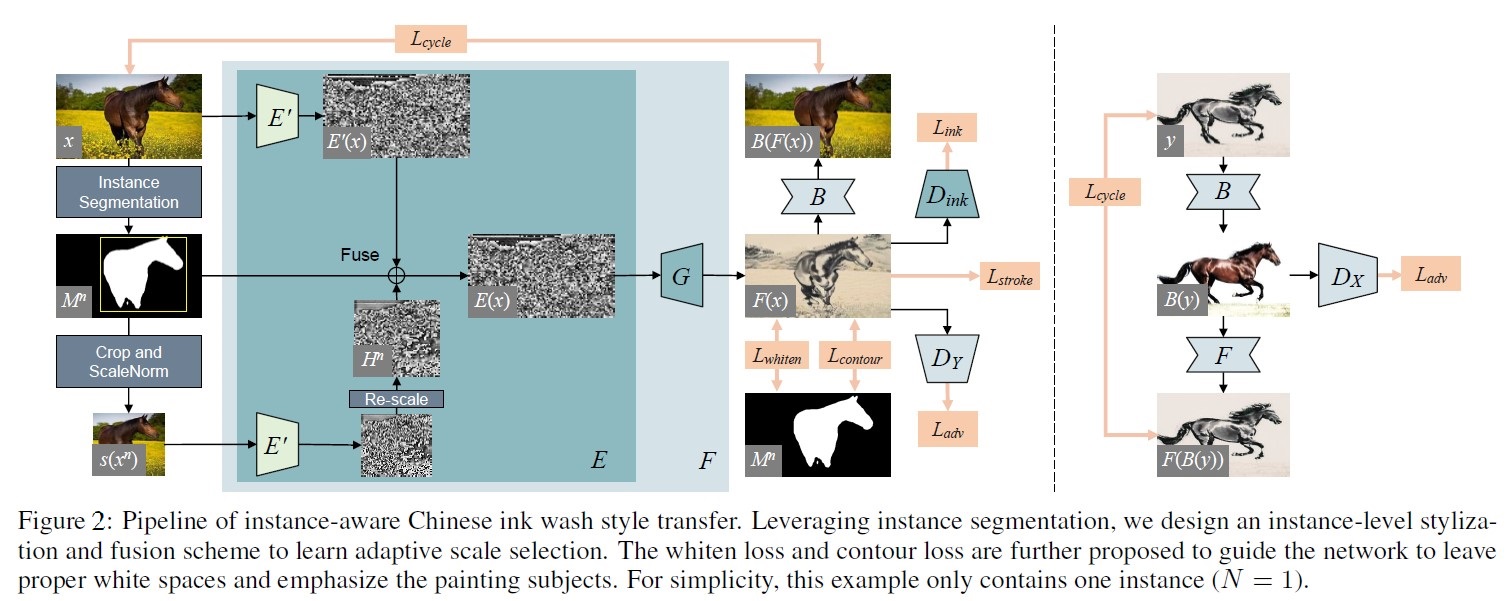

Image Ink Wash Style Transfer: We introduce instance segmentation into the generation process. We improve the white space and contour strokes through a whiten loss and a contour loss, both based on instance segmentation. We also propose an adaptive scale method that adjusts to the different subjects in the input image and helps the model extract features better.
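To illustrate how an instance mask can drive white space, here is a minimal sketch of a whiten-style penalty. This page does not give the exact formulation, so the definition below (the mean distance from white over background pixels) and the name `whiten_penalty` are assumptions for illustration only, not the loss used in the paper.

```python
import numpy as np


def whiten_penalty(stylized, instance_mask):
    """Mean distance from white over background pixels.

    Assumptions: stylized is a grayscale image (H, W) with values in [0, 1],
    where 1.0 is white paper; instance_mask (H, W) is 1 on the foreground
    subject and 0 on the background.
    """
    background = 1.0 - instance_mask
    count = background.sum()
    if count == 0:
        # No background pixels, so there is nothing to penalize.
        return 0.0
    return float((background * (1.0 - stylized)).sum() / count)
```

Under these assumptions the penalty is zero when the background is pure white and grows as ink is deposited outside the segmented subject, while pixels inside the subject are ignored.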

Selected Experimental Results

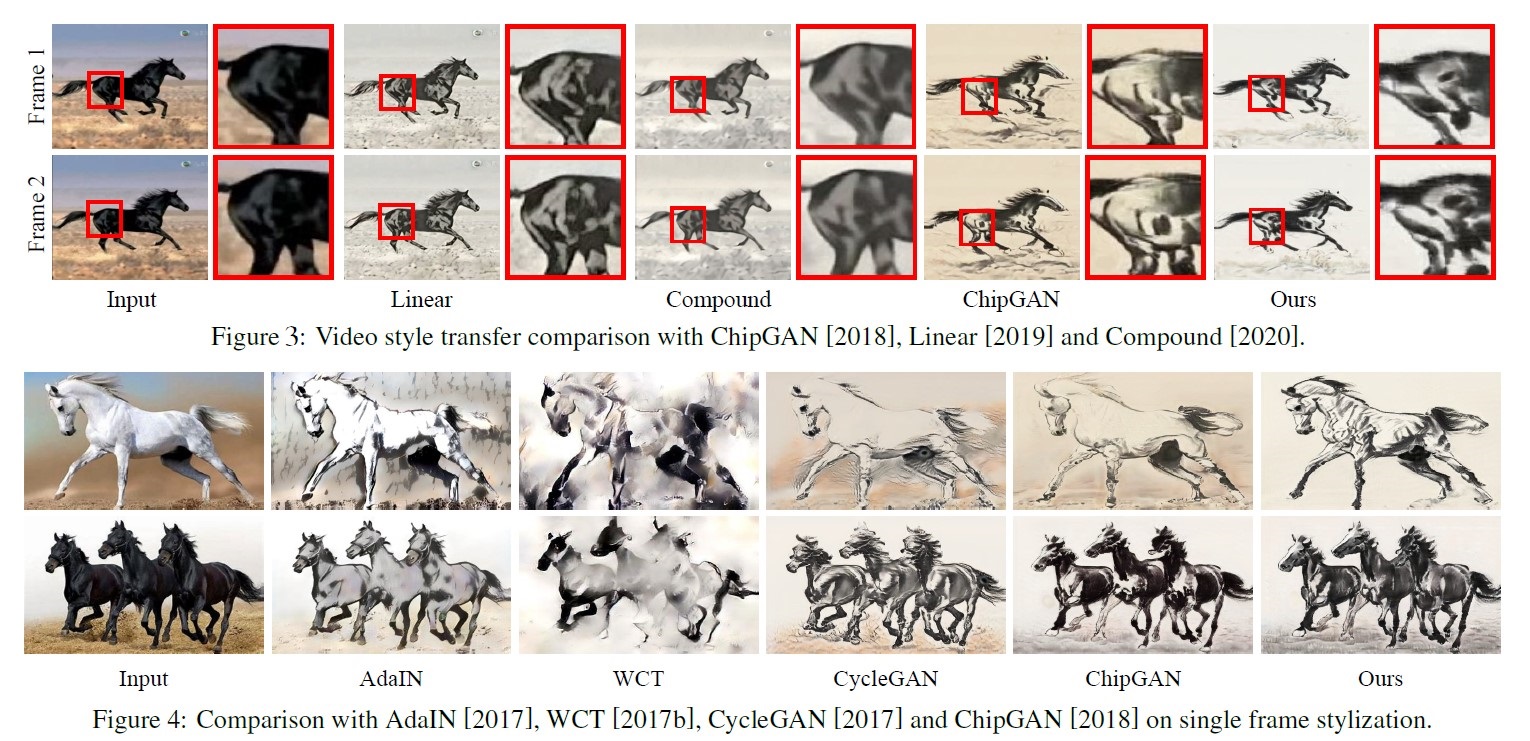

Image results compared with other image style transfer methods (AdaIN, WCT, ChipGAN, CycleGAN) and video style transfer methods (Linear, Compound).

Video results compared with ChipGAN and some video style transfer methods (Linear, Compound).

Video results on different objects and different styles.

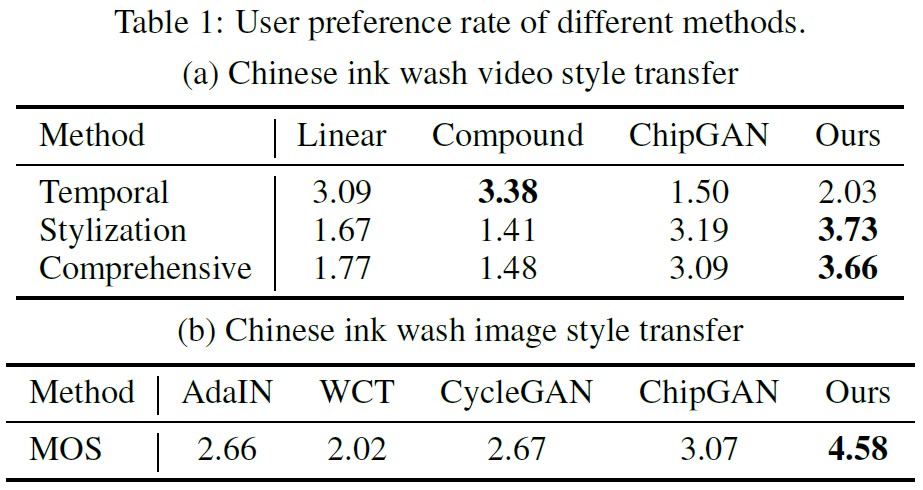

User study on three aspects: temporal consistency, ink wash style, and comprehensive effect.

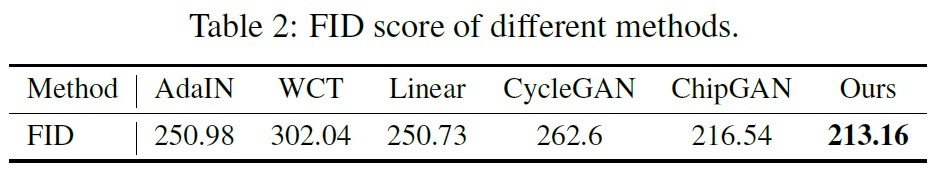

FID scores as a quantitative evaluation. We compare with AdaIN, WCT, Linear, CycleGAN, and ChipGAN.

Resources

- Paper: IJCAI 2021

- Code: Github